cifar10 train no gpu utilization, full gpu memory usage, system cpu full loading · Issue #7339 · tensorflow/models · GitHub

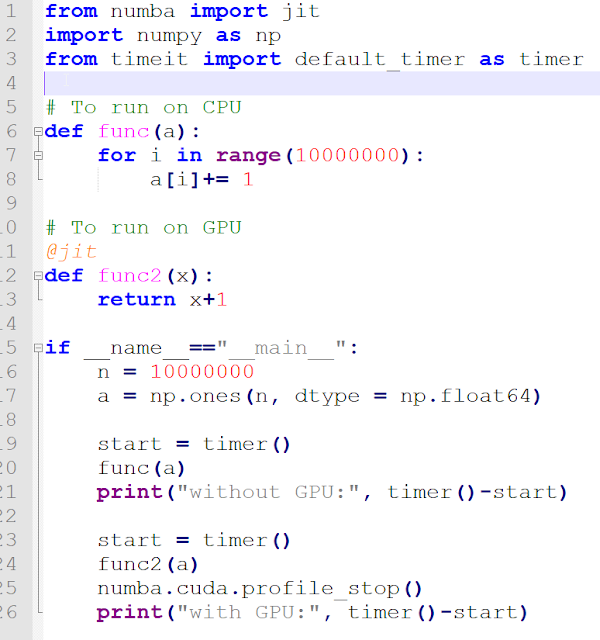

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

How to dedicate your laptop GPU to TensorFlow only, on Ubuntu 18.04. | by Manu NALEPA | Towards Data Science

Python API Transformer.from_pretrained support directly to load on GPU · Issue #2480 · facebookresearch/fairseq · GitHub